Immediate Recognition and Response

With the advent of Artificial Intelligence (AI), platforms have started automating the monitoring of NSFW (Not Safe For Work) content, gaining near-instant detection and processing capabilities. Contemporary AI systems can analyze and moderate content in near real time, which significantly reduces the time that elapses between detection and action. For example, machine learning systems can now scan thousands of images or hours of video in a single second, in support of a more relevant, personalized experience. One recent benchmarking study reportedly found an AI system that can process 1,000 images per second with over 94% accuracy.
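To put throughput and accuracy figures like these in perspective, here is a minimal back-of-the-envelope sketch. It assumes the accuracy figure means the share of images classified correctly, which the cited study may define differently, and the function name is made up for illustration.

```python
def expected_errors_per_second(images_per_second: float, accuracy: float) -> float:
    """Estimate how many images per second are misclassified.

    Assumes `accuracy` is the fraction of all images classified correctly.
    """
    return images_per_second * (1.0 - accuracy)


# Figures quoted in the text: 1,000 images per second at 94% accuracy.
errors = expected_errors_per_second(1000, 0.94)
print(f"about {round(errors)} misclassified images per second")
print(f"about {round(errors * 3600):,} misclassified images per hour")
```

Even a high accuracy figure leaves a meaningful number of mistakes at this volume, which is why human review and appeal paths usually sit alongside automated filters.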

Improving Accuracy with Deep Learning Techniques

Deep learning is a major contributor to the accuracy of AI in NSFW content moderation. Because these models are trained on large datasets, they can detect anything from explicit (pornographic) imagery to borderline or moderate NSFW content, including in text. Convolutional neural networks (CNNs) and recurrent neural networks (RNNs) have gone a long way toward helping AI understand context and the nuances that simpler rule-based systems miss. One major video-sharing platform reportedly uses AI to automatically identify and filter NSFW content from live-streamed video with accuracy as high as 98%.

Immediate Responsiveness to New Problems

Real-time adaptation is one reason AI matters in NSFW moderation: AI filters can adapt to new forms of inappropriate content faster than human moderators can. This adaptability is necessary for moderation systems to stay effective. During a major sporting event in 2023, an AI model that was monitored and updated in real time identified and moderated NSFW content that appeared spontaneously on live broadcasts as soon as it happened.

Tradeoff Between Speed and Sensitivity

Effective AI moderation requires striking a balance between speed and sensitivity. Although AI can process content faster than humans, avoiding both under-filtering and over-filtering remains an enormous challenge. To this end, developers fine-tune their AI systems to minimize both false positives (safe content classified as NSFW) and false negatives (NSFW content that is not filtered out). AI models are honed through repeated training and feedback loops, so they make more accurate decisions with barely any added processing time.
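The balance between false positives and false negatives often comes down to where a score threshold is set. The sketch below is illustrative only: the scores, labels, candidate thresholds, and cost weights are made-up assumptions, and `rates_at` and `pick_threshold` are hypothetical helper names rather than part of any particular moderation system.

```python
from dataclasses import dataclass


@dataclass
class Rates:
    threshold: float
    false_positive_rate: float  # safe content wrongly flagged as NSFW
    false_negative_rate: float  # NSFW content wrongly let through


def rates_at(scores, labels, threshold):
    """Flag an item as NSFW when its score is >= threshold.

    `labels` holds ground truth (True = NSFW). Both classes must be present.
    """
    pairs = list(zip(scores, labels))
    fp = sum(1 for s, y in pairs if s >= threshold and not y)
    fn = sum(1 for s, y in pairs if s < threshold and y)
    safe_total = sum(1 for _, y in pairs if not y)
    nsfw_total = sum(1 for _, y in pairs if y)
    return Rates(threshold, fp / safe_total, fn / nsfw_total)


def pick_threshold(scores, labels, candidates, fn_cost=5.0, fp_cost=1.0):
    """Return the candidate threshold with the lowest weighted error cost.

    The default weights assume a missed NSFW item is five times as costly
    as a wrongly flagged safe item; that ratio is a policy choice.
    """
    return min(
        (rates_at(scores, labels, t) for t in candidates),
        key=lambda r: fn_cost * r.false_negative_rate
        + fp_cost * r.false_positive_rate,
    )


# Made-up validation data: model scores and ground truth (True = NSFW).
scores = [0.05, 0.20, 0.35, 0.55, 0.60, 0.80, 0.90, 0.95]
labels = [False, False, False, True, False, True, True, True]

best = pick_threshold(scores, labels, candidates=[0.3, 0.5, 0.7])
print(best)
```

Lowering the threshold catches more NSFW content but flags more safe content, and raising it does the opposite. Weighting the two error types makes that tradeoff explicit instead of leaving it buried in a single accuracy number.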

Privacy and Ethical Considerations

When deploying AI for real-time NSFW content moderation, privacy and ethical issues must come to the forefront. AI systems should protect user privacy and operate transparently. Regular audits and updates are needed to maintain trust and to comply with international privacy regulations.

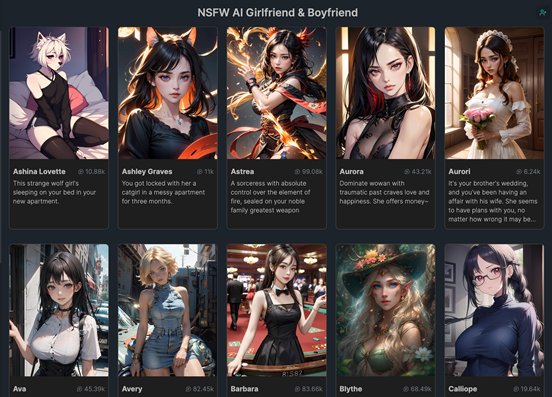
